HDFSConnector

The HDFSConnector component allows users to interact with the Hadoop Distributed File System (HDFS). The component can read, write, append to, copy, and delete files in HDFS.

Configuration

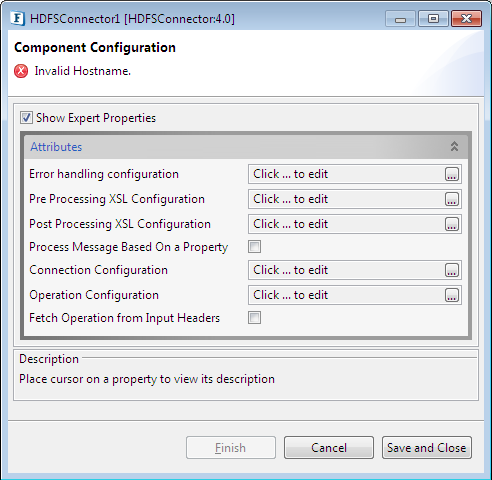

The HDFSConnector component can be configured using its Custom Property Sheet (CPS) wizard.

Figure 1: Component Configuration properties in the HDFSConnector CPS

Attributes

Connection Configuration

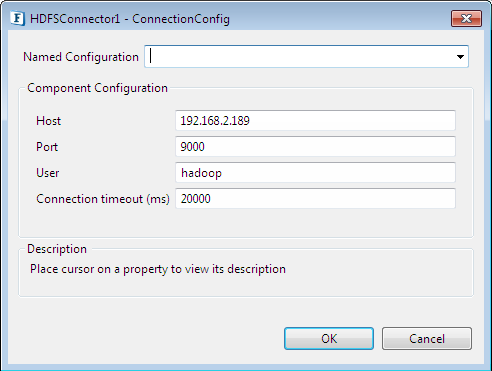

Figure 2: Connection Configuration properties

Host

The host name or IP address of the machine on which the Hadoop NameNode server runs.

Port

The port on which the NameNode server accepts client connections.

User

The user that owns the folder in the Hadoop cluster on which operations are performed.

Connection timeout (ms)

Connection timeout, in milliseconds. When the component attempts to connect to the server, it waits up to this interval for the connection to be established before treating the attempt as failed.
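As an illustration of what these settings mean (not the component's implementation), the following Python sketch assumes that Host and Port identify the NameNode endpoint that a Hadoop client addresses as hdfs://host:port, and that the timeout bounds a single connection attempt. The helper names namenode_uri and can_connect are hypothetical, and the TCP check only shows whether something is listening on that port; it does not speak the Hadoop protocol.

```python
import socket


def namenode_uri(host, port):
    """Build the hdfs:// URI a Hadoop client would use for this NameNode."""
    return f"hdfs://{host}:{port}"


def can_connect(host, port, timeout_ms):
    """Return True if a TCP connection opens within timeout_ms milliseconds."""
    try:
        # Python socket timeouts are in seconds; the component setting is in ms.
        with socket.create_connection((host, port), timeout=timeout_ms / 1000):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False
```

For example, `can_connect("namenode.example.com", 8020, 5000)` checks reachability with a 5-second timeout; the host name and port here are placeholders, not values required by the component.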

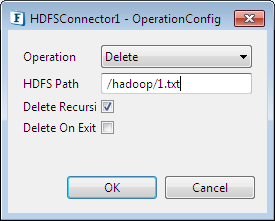

Operation Configuration

Click Operation Configuration ellipsis button to configure the operations to be performed by the component.

Figure 3: Operation Configuration properties

Operation

The operation to be performed on HDFS.

The component supports the following operations:

- Write

- Append

- Copy From Local To HDFS

- Copy From HDFS To Local

- Read

- Delete

HDFS Path

Absolute path in HDFS on which the operation is performed. This path is mandatory for all operations.

Local Path

Absolute path in the local file system. It is used only by the Copy From Local To HDFS operation (as the source) and the Copy From HDFS To Local operation (as the destination).
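The path rules above can be expressed as a small pre-flight check on a request. This is a hypothetical helper, not part of the component; it uses the Operation values exactly as they appear in the sample inputs, and it assumes plain absolute HDFS paths such as /user/hadoop/blue.txt rather than fully qualified hdfs:// URIs.

```python
import os

# Operation values as they appear in the sample input messages.
OPERATIONS = {
    "Write", "Append", "CopyFromLocalToHdfs",
    "CopyFromHdfsToLocal", "Read", "Delete",
}
# Only the two copy operations use Local Path.
NEEDS_LOCAL_PATH = {"CopyFromLocalToHdfs", "CopyFromHdfsToLocal"}


def validate_request(operation, hdfs_path, local_path=None):
    """Return a list of problems; an empty list means the request looks valid."""
    problems = []
    if operation not in OPERATIONS:
        problems.append(f"unknown operation: {operation!r}")
    # HDFS Path is mandatory for every operation and must be absolute.
    if not hdfs_path or not hdfs_path.startswith("/"):
        problems.append("HDFSPath must be an absolute HDFS path")
    if operation in NEEDS_LOCAL_PATH:
        if not local_path or not os.path.isabs(local_path):
            problems.append("LocalPath must be an absolute local path")
    return problems
```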

The following properties appear only for the Delete operation:

Delete Recursively

If enabled, a folder is deleted together with all of its subfolders and files.

Delete on Exit

If enabled, the file is not deleted immediately; it is deleted when the component is stopped.
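The two Delete options can be illustrated without a Hadoop cluster. The following sketch mimics them on the local file system, assuming that a non-recursive delete of a non-empty folder fails (as it does in HDFS) and modelling "when the component is stopped" with a stop() method. The LocalDeleter class is hypothetical and is not part of the component.

```python
import os
import shutil


class LocalDeleter:
    """Local-filesystem analogue of Delete Recursively / Delete on Exit."""

    def __init__(self):
        self._pending = []  # paths scheduled with on_exit=True

    def delete(self, path, recursive=False, on_exit=False):
        if on_exit:
            self._pending.append(path)  # defer the delete until stop()
            return
        if os.path.isdir(path):
            if recursive:
                shutil.rmtree(path)  # folder plus all subfolders and files
            else:
                os.rmdir(path)  # raises OSError if the folder is not empty
        else:
            os.remove(path)

    def stop(self):
        """Delete everything that was scheduled with on_exit=True."""
        for path in self._pending:
            if os.path.isdir(path):
                shutil.rmtree(path)
            elif os.path.exists(path):
                os.remove(path)
        self._pending.clear()
```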

Fetch Operation from Input Headers

If this option is enabled, the input schema is not used, and the operation and path details configured in the component are overwritten with values fetched from the input message headers. If this option is disabled, they are overwritten with values fetched from the input XML.
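The override rule above can be sketched in Python as follows. The sketch assumes that the "operation and path details" are the Operation, HDFSPath, and LocalPath values, that only values actually present in the headers or the XML replace the configured ones, and that header names match the schema element names. The function name resolve_request is hypothetical.

```python
import xml.etree.ElementTree as ET

NS = "http://www.fiorano.com/fesb/HDFSConnector/In"
FIELDS = ("Operation", "HDFSPath", "LocalPath")


def resolve_request(configured, message_text, headers, fetch_from_headers):
    """Return the effective operation and path settings for one message."""
    effective = dict(configured)  # start from the CPS configuration
    if fetch_from_headers:
        source = headers  # header names match the schema element names
    else:
        root = ET.fromstring(message_text)
        source = {}
        for name in FIELDS:
            elem = root.find(f"{{{NS}}}{name}")
            if elem is not None and elem.text:
                source[name] = elem.text.strip()
    # Overwrite configured values with whatever the chosen source provides.
    for name in FIELDS:
        if name in source:
            effective[name] = source[name]
    return effective
```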

Inputs and Outputs

This section describes the input schema and gives sample inputs with their corresponding outputs.

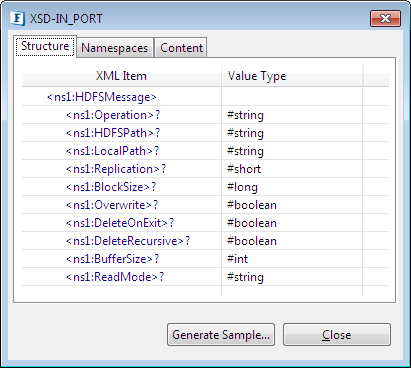

Schema

If the “Fetch Operation from Input Headers” option is enabled, the schema will not be used. In this case, headers with same names as elements mentioned below can be used to specify values.

Figure 4: Input Schema

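Replication: The replication factor of the file, that is, the number of copies of each block that HDFS keeps (for example, 1).

Block Size: The block size, in bytes, used for the file (for example, 33554432 bytes, which is 32 MB).

Overwrite: Whether an existing file at the destination is overwritten (true or false).

Buffer Size: The size, in bytes, of the buffer used for the operation (for example, 4096).

Read Mode: The mode in which file content is read, either as bytes or as text. Set the value to Byte or Text.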
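In HDFS a file is split into blocks of at most Block Size bytes, and each block is stored Replication times. The hypothetical helper below (not provided by the component) works out the resulting block count and the raw storage used, ignoring checksum and NameNode metadata overhead; the last block holds only the remaining bytes, so it is not padded to the full block size.

```python
def hdfs_footprint(file_size, block_size, replication):
    """Return (number of blocks, total bytes stored across all replicas)."""
    if block_size <= 0 or replication <= 0:
        raise ValueError("block_size and replication must be positive")
    # Integer ceiling division: a partial final block still counts as a block.
    blocks = (file_size + block_size - 1) // block_size
    # Raw usage is the file size multiplied by the replication factor.
    return blocks, file_size * replication
```

With the Write sample's block size of 33554432 bytes, a 100 MB (104857600-byte) file occupies 4 blocks; with a replication factor of 3 it uses 314572800 bytes of raw cluster storage.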

The following properties are described in the Operation Configuration section above:

- Operation

- HDFS Path

- Local Path

- Delete on Exit

- Delete Recursive
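Input messages that follow this schema can also be generated programmatically. The sketch below uses Python's standard xml.etree.ElementTree; the function name build_message is hypothetical, and it assumes that the fields should be passed in the order the samples use (the schema may define a fixed element order). ElementTree chooses its own namespace prefix (for example ns0 instead of ns1), which is equivalent XML because the namespace URI is the same.

```python
import xml.etree.ElementTree as ET

NS = "http://www.fiorano.com/fesb/HDFSConnector/In"


def build_message(**fields):
    """Build an HDFSMessage; keyword names are schema element names."""
    root = ET.Element(f"{{{NS}}}HDFSMessage")
    for name, value in fields.items():  # keyword order is preserved
        child = ET.SubElement(root, f"{{{NS}}}{name}")
        # Booleans are written in lowercase, as in the samples (true/false).
        child.text = str(value).lower() if isinstance(value, bool) else str(value)
    return ET.tostring(root, encoding="unicode")
```

For example, `build_message(Operation="Write", HDFSPath="/user/hadoop/blue1.txt", Replication=1, BlockSize=33554432, Overwrite=False, BufferSize=4096)` produces a message equivalent to the Write sample.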

Samples

Below are a few sample inputs using the schema and their corresponding outputs.

Delete

Sample Input

<ns1:HDFSMessage xmlns:ns1="http://www.fiorano.com/fesb/HDFSConnector/In">
    <ns1:Operation>Delete</ns1:Operation>
    <ns1:HDFSPath>/user/hadoop/blue.txt</ns1:HDFSPath>
    <ns1:DeleteOnExit>false</ns1:DeleteOnExit>
    <ns1:DeleteRecursive>false</ns1:DeleteRecursive>
</ns1:HDFSMessage>

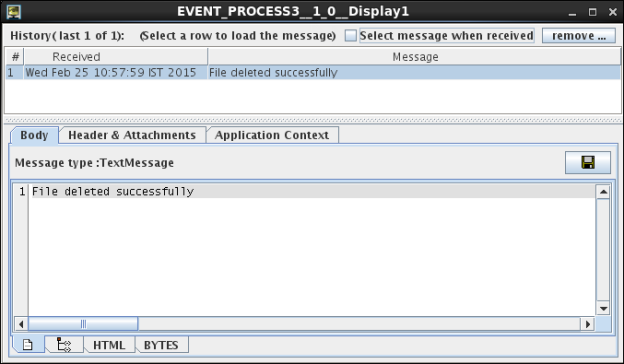

Output

If completed successfully, the following message will appear in the Display window (when Display component is connected to theHDFSConnector).

Figure 5: Delete Output

Copy From Local To HDFS

Sample Input

<ns1:HDFSMessage xmlns:ns1="http://www.fiorano.com/fesb/HDFSConnector/In">
    <ns1:Operation>CopyFromLocalToHdfs</ns1:Operation>
    <ns1:HDFSPath>/user/hadoop</ns1:HDFSPath>
    <ns1:LocalPath>/user/hadoop/blue.txt</ns1:LocalPath>
    <ns1:Overwrite>false</ns1:Overwrite>
</ns1:HDFSMessage>

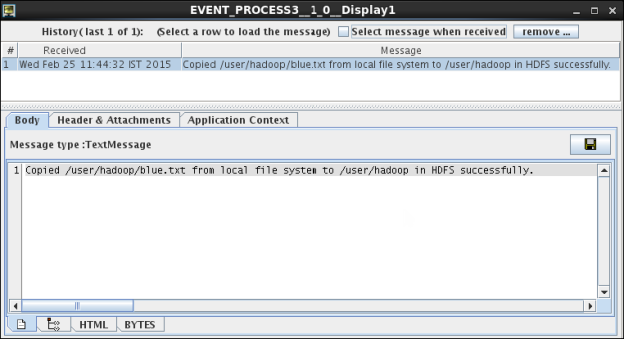

Output

Figure 6: Copy from Local to HDFS Output

Write

Sample Input

<ns1:HDFSMessage xmlns:ns1="http://www.fiorano.com/fesb/HDFSConnector/In">
    <ns1:Operation>Write</ns1:Operation>
    <ns1:HDFSPath>/user/hadoop/blue1.txt</ns1:HDFSPath>
    <ns1:Replication>1</ns1:Replication>
    <ns1:BlockSize>33554432</ns1:BlockSize>
    <ns1:Overwrite>false</ns1:Overwrite>
    <ns1:BufferSize>4096</ns1:BufferSize>
</ns1:HDFSMessage>

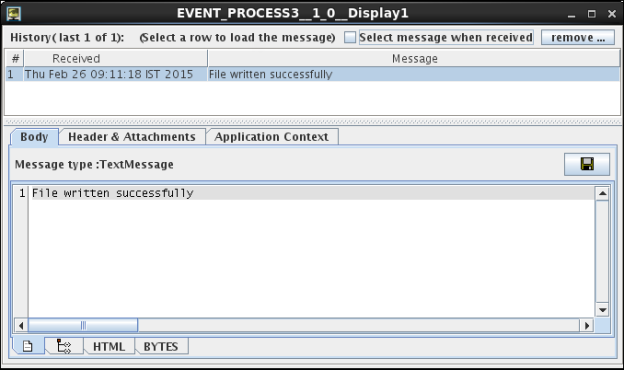

Output

Figure 7: Write Output

Copy From HDFS To Local

Sample Input

<ns1:HDFSMessage xmlns:ns1="http://www.fiorano.com/fesb/HDFSConnector/In">
    <ns1:Operation>CopyFromHdfsToLocal</ns1:Operation>
    <ns1:HDFSPath>/user/hadoop/blue.txt</ns1:HDFSPath>
    <ns1:LocalPath>/user/hadoop</ns1:LocalPath>
</ns1:HDFSMessage>

Output

Figure 8: Copy from HDFS to Local Output

Read

Sample Input

<ns1:HDFSMessage xmlns:ns1="http://www.fiorano.com/fesb/HDFSConnector/In">
    <ns1:Operation>Read</ns1:Operation>
    <ns1:HDFSPath>/user/hadoop/blue.txt</ns1:HDFSPath>
    <ns1:BufferSize>4096</ns1:BufferSize>
    <ns1:ReadMode>Text</ns1:ReadMode>
</ns1:HDFSMessage>

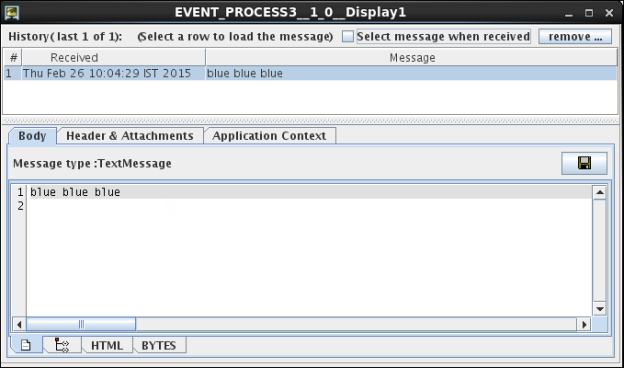

Output

Figure 9: Read Output

Append

Sample Input

<ns1:HDFSMessage xmlns:ns1="http://www.fiorano.com/fesb/HDFSConnector/In">
    <ns1:Operation>Append</ns1:Operation>
    <ns1:HDFSPath>/user/hadoop/blue.txt</ns1:HDFSPath>
    <ns1:BufferSize>4096</ns1:BufferSize>
</ns1:HDFSMessage>

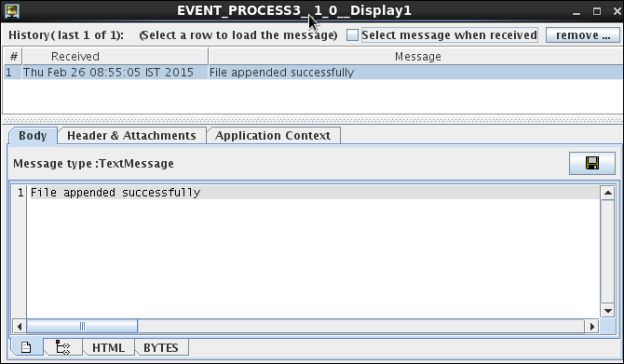

Output

Figure 10: Append Output

To run the HDFSConnector component on a Windows machine, the system property ‘hadoop.home.dir’ must be set in the runtime arguments of the component so that Hadoop can locate ‘winutils.exe’. Hadoop looks for winutils.exe in the bin subfolder of this directory, so set the property to the folder that contains bin\winutils.exe. To do this, navigate to Service Instance Properties > Runtime Arguments > JVM_PARAMS and append '-Dhadoop.home.dir=<path of the folder containing bin\winutils.exe>' to the existing JVM parameters.